pip install --q tomotopy pyLDAvis pyvis seabornNote: you may need to restart the kernel to use updated packages.March 5, 2024

A chat with a colleague had me thinking about topic modeling. I haven’t tried it in a while. I’ve primarily used Gensim in the past, but I’ve always wanted to try a library that used Gibbs sampling, which is rumored to produce more coherent topics. Installing Java every few months is a pain, so I’ve shied away from Mallet, which I suspect is the best tool. Instead, I thought I would try tomotopy which uses Gibbs sampling and supports most of the major variants of topic modeling, such as correlated and dynamic topic models.

Note: you may need to restart the kernel to use updated packages.My sample dataset is ten thousand abstracts from recent sociology articles.

df = pd.read_json(

"https://raw.githubusercontent.com/nealcaren/notes/main/posts/abstracts/sociology-abstracts.json"

)

len(df)9797| DOI | Source title | Year | Authors | Title | Abstract | |

|---|---|---|---|---|---|---|

| 2790 | 10.1002/symb.209 | Symbolic Interaction | 2016 | Ivana G.-I. | Face and the dynamics of its construction: A r... | This article proposes a new understanding of t... |

| 9693 | 10.1177/0049124120926214 | Sociological Methods and Research | 2023 | Cheng S. | How to Borrow Information From Unlinked Data? ... | One of the most important developments in the ... |

| 3676 | 10.1177/0049124115591013 | Sociological Methods and Research | 2017 | Piccarreta R. | Joint Sequence Analysis: Association and Clust... | In its standard formulation, sequence analysis... |

First step is to build a corpus where each text is tokenized list of words, minus punctuation and stopwords.

nltk.download('stopwords')

def create_stopwords(bonus_stops=[]):

english_stops = list(set(nltk_stopwords.words("english")))

english_stops.extend(bonus_stops)

return english_stops

def create_tokenizer(stopwords):

pat = re.compile("^[a-z]{2,}$")

return lambda x: x in stopwords or not pat.match(x)

def preprocess_corpus(texts, stopwords):

corpus = tp.utils.Corpus(

tokenizer=tp.utils.SimpleTokenizer(),

stopwords=create_tokenizer(stopwords),

)

corpus.process(d.lower() for d in texts)

return corpus

stopwords = create_stopwords()

corpus = preprocess_corpus(df["Abstract"].values, stopwords)For starters, I’m going to use a vanilla LDAModel with 20 topics (k=20), excluding words that are in less than 25 documents (min_df=25) or that are among the top 20 most frequent words (rm_top=20) and using the default alpha and eta values.

mdl = tp.LDAModel(min_df=25, rm_top=20, k=20, corpus=corpus)

mdl.train(0)

print(

"Num docs:{}, Num Vocabs:{}, Total Words:{}".format(

len(mdl.docs), len(mdl.used_vocabs), mdl.num_words

)

)

print("Removed Top words: ", *mdl.removed_top_words)

# Let's train the model

mdl.train(1000, show_progress=True)Num docs:9797, Num Vocabs:4055, Total Words:779364

Removed Top words: social data study research women using health article results among gender children racial work findings find also family effects use

Iteration: 0%| | 0/1000 [00:00<?, ?it/s]

Iteration: 100%|██████████| 1000/1000 [00:22<00:00, 45.21it/s, LLPW: -7.911747]<Basic Info>

| LDAModel (current version: 0.12.7)

| 9797 docs, 779364 words

| Total Vocabs: 27554, Used Vocabs: 4055

| Entropy of words: 7.66138

| Entropy of term-weighted words: 7.66138

| Removed Vocabs: social data study research women using health article results among gender children racial work findings find also family effects use

|

<Training Info>

| Iterations: 1000, Burn-in steps: 0

| Optimization Interval: 10

| Log-likelihood per word: -7.91053

|

<Initial Parameters>

| tw: TermWeight.ONE

| min_cf: 0 (minimum collection frequency of words)

| min_df: 25 (minimum document frequency of words)

| rm_top: 20 (the number of top words to be removed)

| k: 20 (the number of topics between 1 ~ 32767)

| alpha: [0.1] (hyperparameter of Dirichlet distribution for document-topic, given as a single `float` in case of symmetric prior and as a list with length `k` of `float` in case of asymmetric prior.)

| eta: 0.01 (hyperparameter of Dirichlet distribution for topic-word)

| seed: 2345699557 (random seed)

| trained in version 0.12.7

|

<Parameters>

| alpha (Dirichlet prior on the per-document topic distributions)

| [0.74950975 0.15333296 0.06660293 0.0614165 0.08869717 0.11870419

| 0.10664396 0.10327947 0.10891502 0.04644565 0.12843344 0.09266509

| 0.08365667 0.16978636 0.08694994 0.22936496 0.29218492 0.5656968

| 0.05454639 0.12801994]

| eta (Dirichlet prior on the per-topic word distribution)

| 0.01

|

<Topics>

| #0 (145429) : theory analysis new sociology process

| #1 (34550) : model models approach analysis measures

| #2 (21499) : marriage fertility couples marital birth

| #3 (14804) : attitudes religious political religion toward

| #4 (25473) : school students education educational college

| #5 (29031) : black white race ethnic american

| #6 (25444) : network networks ties trust members

| #7 (26005) : labor workers market employment job

| #8 (29319) : political movement collective movements organizations

| #9 (12103) : immigrants migration immigrant immigration migrants

| #10 (35722) : life mental young associated adults

| #11 (22652) : neighborhood urban neighborhoods segregation housing

| #12 (15834) : online survey information respondents digital

| #13 (36572) : state police policy legal public

| #14 (28487) : parents mothers child families time

| #15 (59056) : income inequality changes across time

| #16 (66262) : cultural interviews identity culture practices

| #17 (110822) : whether effect status associated relationship

| #18 (13597) : men sexual sex violence gendered

| #19 (26703) : economic countries global environmental state

|

The standard way to inspect the model is a pyldavis visualization. The function below prepares all the neccesary fields.

def prepare_data_for_pyldavis(mdl):

"""

Prepares data from a tomotopy LDA model for visualization with pyLDAvis.

Args:

- mdl: A tomotopy LDA model instance.

Returns:

- prepared_data: A pyLDAvis.PreparedData object ready for visualization.

"""

# Extract topic-term distributions

topic_term_dists = np.stack([mdl.get_topic_word_dist(k) for k in range(mdl.k)])

# Extract document-topic distributions and normalize

doc_topic_dists = np.stack([doc.get_topic_dist() for doc in mdl.docs])

doc_topic_dists /= doc_topic_dists.sum(axis=1, keepdims=True)

# Extract document lengths

doc_lengths = np.array([len(doc.words) for doc in mdl.docs])

# Extract vocabulary and term frequency

vocab = list(mdl.used_vocabs)

term_frequency = mdl.used_vocab_freq

# Prepare data for pyLDAvis

prepared_data = pyLDAvis.prepare(

topic_term_dists,

doc_topic_dists,

doc_lengths,

vocab,

term_frequency,

start_index=0, # tomotopy starts topic ids with 0, pyLDAvis with 1

sort_topics=False, # IMPORTANT: otherwise the topic_ids between pyLDAvis and tomotopy are not matching!

)

return prepared_data

prepared_data = prepare_data_for_pyldavis(mdl)

pyLDAvis.display(prepared_data)The standard measure of goodness of fit are coherence scores, but they can really only be used to compare models that are similar in nature. For example, you can use coherence scores to compare two topic models with different topics on the same dataset, but you can’t use them to evaluate sa single run or across datasets.

def calculate_coherence_scores(model):

coherence_results = {"topics": model.k}

presets = ["u_mass", "c_uci", "c_npmi", "c_v"]

for preset in presets:

coh = tp.coherence.Coherence(model, coherence=preset)

average_coherence = coh.get_score()

coherence_results[preset] = average_coherence

return coherence_results

calculate_coherence_scores(mdl){'topics': 20,

'u_mass': -2.1699763125234797,

'c_uci': 0.6845388528655324,

'c_npmi': 0.09235792115864401,

'c_v': 0.6308152285590767}For round two, I want to treat some word combinations, such as “monte carlo” as single words.

cands = corpus.extract_ngrams(

min_cf=20, min_df=10, max_len=5, max_cand=50, normalized=True

)

for cand in cands:

print(cand)tomotopy.label.Candidate(words=["monte","carlo"], name="", score=0.997176)

tomotopy.label.Candidate(words=["et","al"], name="", score=0.988041)

tomotopy.label.Candidate(words=["vice","versa"], name="", score=0.975946)

tomotopy.label.Candidate(words=["assortative","mating"], name="", score=0.973394)

tomotopy.label.Candidate(words=["los","angeles"], name="", score=0.969850)

tomotopy.label.Candidate(words=["du","bois"], name="", score=0.962124)

tomotopy.label.Candidate(words=["depressive","symptoms"], name="", score=0.956196)

tomotopy.label.Candidate(words=["nationally","representative"], name="", score=0.953218)

tomotopy.label.Candidate(words=["san","francisco"], name="", score=0.942331)

tomotopy.label.Candidate(words=["united","states"], name="", score=0.941290)

tomotopy.label.Candidate(words=["hurricane","katrina"], name="", score=0.935906)

tomotopy.label.Candidate(words=["exponential","random","graph"], name="", score=0.913708)

tomotopy.label.Candidate(words=["erving","goffman"], name="", score=0.878780)

tomotopy.label.Candidate(words=["participant","observation"], name="", score=0.874644)

tomotopy.label.Candidate(words=["skin","tone"], name="", score=0.873090)

tomotopy.label.Candidate(words=["formerly","incarcerated"], name="", score=0.863448)

tomotopy.label.Candidate(words=["exponential","random"], name="", score=0.860323)

tomotopy.label.Candidate(words=["random","graph"], name="", score=0.852178)

tomotopy.label.Candidate(words=["north","carolina"], name="", score=0.849468)

tomotopy.label.Candidate(words=["propensity","score"], name="", score=0.848840)

tomotopy.label.Candidate(words=["per","capita"], name="", score=0.842935)

tomotopy.label.Candidate(words=["twentieth","century"], name="", score=0.841853)

tomotopy.label.Candidate(words=["supreme","court"], name="", score=0.835858)

tomotopy.label.Candidate(words=["great","recession"], name="", score=0.830242)

tomotopy.label.Candidate(words=["ordinary","least","squares"], name="", score=0.829869)

tomotopy.label.Candidate(words=["upwardly","mobile"], name="", score=0.826883)

tomotopy.label.Candidate(words=["real","estate"], name="", score=0.818797)

tomotopy.label.Candidate(words=["propensity","score","matching"], name="", score=0.817808)

tomotopy.label.Candidate(words=["shed","light"], name="", score=0.816881)

tomotopy.label.Candidate(words=["least","squares"], name="", score=0.814131)

tomotopy.label.Candidate(words=["randomly","assigned"], name="", score=0.805640)

tomotopy.label.Candidate(words=["latin","america"], name="", score=0.802872)

tomotopy.label.Candidate(words=["exponential","random","graph","models"], name="", score=0.802133)

tomotopy.label.Candidate(words=["logistic","regression"], name="", score=0.801361)

tomotopy.label.Candidate(words=["criminal","justice"], name="", score=0.788675)

tomotopy.label.Candidate(words=["life","course"], name="", score=0.788353)

tomotopy.label.Candidate(words=["sheds","light"], name="", score=0.782329)

tomotopy.label.Candidate(words=["multinomial","logistic"], name="", score=0.780178)

tomotopy.label.Candidate(words=["video","abstract"], name="", score=0.778813)

tomotopy.label.Candidate(words=["tea","party"], name="", score=0.778165)

tomotopy.label.Candidate(words=["wide","range"], name="", score=0.774173)

tomotopy.label.Candidate(words=["psychological","distress"], name="", score=0.769570)

tomotopy.label.Candidate(words=["score","matching"], name="", score=0.762304)

tomotopy.label.Candidate(words=["intensive","mothering"], name="", score=0.761763)

tomotopy.label.Candidate(words=["educational","attainment"], name="", score=0.761300)

tomotopy.label.Candidate(words=["paternity","leave"], name="", score=0.761110)

tomotopy.label.Candidate(words=["personality","traits"], name="", score=0.755425)

tomotopy.label.Candidate(words=["labor","market"], name="", score=0.751363)

tomotopy.label.Candidate(words=["lesbian","gay","bisexual"], name="", score=0.746734)

tomotopy.label.Candidate(words=["structural","equation"], name="", score=0.746566)I also want to add more stopwords based on words that are commonly used in my data but aren’t interesting to analyze.

new_stops = ['one',

'four',

'often',

'three',

'third',

'many',

'despite',

'this',

'approach',

'data',

'rather',

'use',

'second',

'using',

'article',

'also',

'research',

'social',

'new',

'analysis',

'fourth',

'may',

'however',

'argue',

'among',

'first',

'results',

'studies',

'provide',

'two',

'thus',

'has',

'even',

'yet',

'study']Create a new corpus that removes th stop words and combines words to create ngrams.

def extract_ngrams(corpus, min_score=.8, ngram_max=7):

for n in range(ngram_max, 1, -1):

ngrams = corpus.extract_ngrams(min_df=10, max_len=n, normalized=True, min_score=min_score)

corpus.concat_ngrams(ngrams, delimiter="-")

return corpus

stopwords_adj = create_stopwords(new_stops)

corpus_adj = preprocess_corpus(df['Abstract'].values, stopwords_adj)

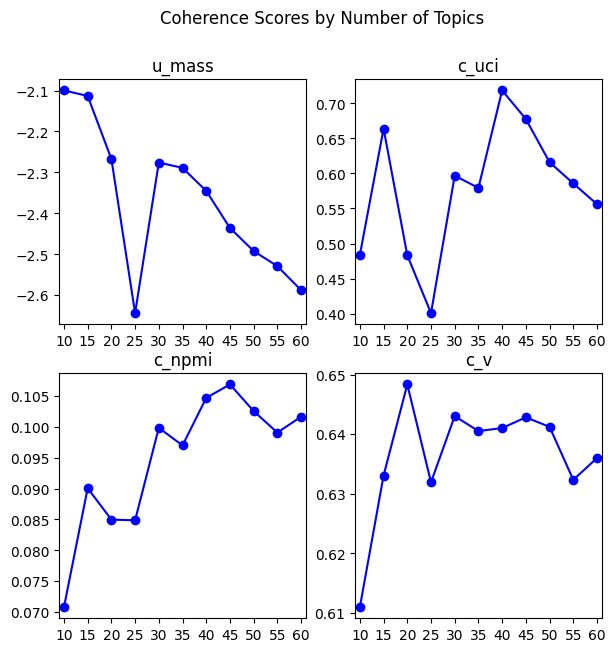

corpus_adj = extract_ngrams(corpus_adj)I asked ChatGPT to generate a loop that would run a topic model for different values of k, storing the model and coherence scores. For fun, I also asked it to plot out the scores as it went along. This took a few iterations, but the final version is more exciting to watch as the various coherence graphs are updated.

import matplotlib.pyplot as plt

from IPython.display import display, clear_output

def train_models(corpus, min_df, topic_range, num_iterations):

coherence_measures = ["u_mass", "c_uci", "c_npmi", "c_v"]

coherence_scores = {

measure: [] for measure in coherence_measures

} # Dictionary to store scores for each measure

coherence_scores['k_values'] = []

models = {}

# Generate the list of topic values from topic_range

k_values = list(range(*topic_range))

# Create a 2x2 grid of subplots

fig, axes = plt.subplots(nrows=2, ncols=2, figsize=(7, 7))

fig.suptitle("Coherence Scores by Number of Topics")

# Flatten the axes array for easy indexing

axes = axes.flatten()

# Set x-axis for each subplot

for ax in axes:

ax.set_xticks(k_values) # Set the x-axis ticks to the topic values

ax.set_xlim(

k_values[0]-1, k_values[-1]+1

) # Set the x-axis limits to cover the full range of topic values

ax.set_xlabel("Number of Topics")

ax.set_ylabel("Coherence Score")

for k_topics in k_values:

model = tp.LDAModel(min_df=min_df, k=k_topics, corpus=corpus)

model.train(num_iterations, show_progress=False)

models[k_topics] = model

coherence_scores['k_values'].append(k_topics)

# Assume calculate_coherence_scores returns a dictionary with scores for each measure

coherence_results = calculate_coherence_scores(model)

for i, measure in enumerate(coherence_measures):

coherence_scores[measure].append(coherence_results[measure])

axes[i].clear() # Clear previous lines

axes[i].plot(

k_values[: len(coherence_scores[measure])],

coherence_scores[measure],

marker="o",

color="b",

) # Plot up to current

axes[i].set_title(measure)

axes[i].set_xticks(k_values) # Reset the x-axis ticks to the topic values

axes[i].set_xlim(k_values[0]-1, k_values[-1]+1) # Reset the x-axis limits

# Update the plots

clear_output(wait=True)

display(fig)

plt.close(fig) # Close the figure to prevent additional empty plots from showing

return coherence_scores, models

coherences, models = train_models(

corpus_adj, min_df=25, topic_range=(10, 61, 5), num_iterations=1000

)

Here’s what ChattGPT said about evaluating the coherence scores:

When examining the coherence scores for different numbers of topics in a topic model, we observe: - U_Mass: This measure favors fewer topics with the highest score at 10 topics (-2.099674), indicating that a smaller set of topics may be more coherent within the documents. - C_UCI: This score reflects the degree of semantic coherence based on pointwise mutual information. The model with 40 topics stands out with the highest C_UCI score (0.718497), suggesting that it captures the strongest semantic relationships between words within the topics. - C_NPMI: Normalized Pointwise Mutual Information ranges from -1 to 1, with higher values indicating more coherent topics. The model with 45 topics has the highest C_NPMI score (0.106887), indicating that the topics are well-defined and each topic is semantically coherent. - C_V: This measure considers both the statistical and semantic coherence of topics. The model with 50 topics achieves the highest C_V score (0.641239), suggesting that it offers the most meaningful and interpretable topics.

In summary, the coherence measures suggest different optimal numbers of topics. The U_Mass score points to 10 topics, C_UCI to 40 topics, C_NPMI to 45 topics, and C_V to 50 topics. The C_V score is often given more weight as it is a good indicator of human interpretability. Therefore, based on the C_V measure, one might consider 50 topics as the optimal choice for this dataset. However, it is important to also consider the specific needs of the application and perform a qualitative review of the topics to ensure they align with the goals of the analysis.

I ended up running this a few different times and never real found consistency across the different measures or even within the same measure for different attempts. So, after inspecting the results, I decided 40 struck the right balance between splitting things without being too microfocused or splitting the same thing into two concepts too frequently.

best_mdl = models[40]

prepared_data = prepare_data_for_pyldavis(best_mdl)

pyLDAvis.display(prepared_data)Next, I wanted to add predicted topic scores to the original dataset. I named the columns after the four most representatives words for each topic.

# Function to get the top words for each topic

def get_top_words(model, top_n=4):

top_words = []

for k in range(model.k):

words = [word for word, _ in model.get_topic_words(k, top_n=top_n)]

top_words.append(words)

return top_words

# Get the top words for each topic

top_words_per_topic = get_top_words(best_mdl)

# Create topic names using the top three words

topic_names = ["_".join(words) for words in top_words_per_topic]

# Extract the topic distribution for each document in the model

topic_distributions = [doc.get_topic_dist() for doc in best_mdl.docs]

# Create a DataFrame

tdf = pd.DataFrame(topic_distributions, columns=topic_names)

# Create new DataFrame with the topics

df_combo = pd.concat([df, tdf], axis=1)

df_combo.sample(3)| DOI | Source title | Year | Authors | Title | Abstract | care_women_medical_health | violence_police_crime_gun | poverty_economic_wealth_financial | racial_ethnic_white_whites | ... | survey_respondents_surveys_information | political_attitudes_support_public | relationship_relationships_quality_conflict | case_power_historical_claims | movement_collective_movements_media | organizations_organizational_institutional_organization | children_family_parents_families | interviews_work_practices_drawing | sexual_identity_gay_sex | cultural_culture_capital_consumption | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 9479 | 10.1177/0049124120986208 | Sociological Methods and Research | 2023 | Kalmijn M. | Are National Family Surveys Biased toward the ... | Virtually, all large-scale family surveys in t... | 0.000313 | 0.000473 | 0.000654 | 0.000867 | ... | 0.174660 | 0.000707 | 0.000685 | 0.061151 | 0.000879 | 0.001060 | 0.244236 | 0.004237 | 0.000376 | 0.000569 |

| 5332 | 10.1177/0190272519843303 | Social Psychology Quarterly | 2019 | Heath C.; Mondada L. | Transparency and Embodied Action: Turn Organiz... | Institutional settings in which large numbers ... | 0.000373 | 0.000565 | 0.000781 | 0.001035 | ... | 0.001081 | 0.042321 | 0.000818 | 0.017684 | 0.001049 | 0.277780 | 0.001169 | 0.184792 | 0.000449 | 0.014505 |

| 8730 | 10.1177/07352751221076863 | Sociological Theory | 2022 | Monk E.P., Jr. | Inequality without Groups: Contemporary Theori... | The study of social inequality and stratificat... | 0.000447 | 0.000677 | 0.000936 | 0.001241 | ... | 0.001295 | 0.001011 | 0.017557 | 0.153808 | 0.001258 | 0.001516 | 0.001401 | 0.006063 | 0.000538 | 0.000814 |

3 rows × 46 columns

One way to inspect the topics is to see which topics are most closely associated with each journal. Here, I’m just using the journal mean for each topic, and showing the top few topics The results seem to be pretty consistent with my knowledge of the journals.

for topic in tdf.keys():

# Compute the mean topic values for each source title, sorted in descending order

topic_means = (

df_combo.groupby("Source title")[topic].mean().sort_values(ascending=False)

)

# Get the top five journals

top_journals = topic_means.head(5)

# Print the topic name

print(topic.replace("_", ", "))

# Print the top five journals with the topic value rounded to three decimal places and the source title

for journal, value in top_journals.items():

print(f"* {value:.3f} {journal} ")

# Separator for readability

print("\n---\n")care, women, medical, health

* 0.041 Journal of Health and Social Behavior

* 0.016 Demography

* 0.012 Symbolic Interaction

* 0.011 Gender and Society

* 0.010 Social Problems

---

violence, police, crime, gun

* 0.028 Social Problems

* 0.023 Du Bois Review

* 0.019 Sociological Inquiry

* 0.015 City and Community

* 0.015 Sociological Forum

---

poverty, economic, wealth, financial

* 0.030 City and Community

* 0.027 Social Problems

* 0.025 Demography

* 0.023 Social Currents

* 0.019 Social Forces

---

racial, ethnic, white, whites

* 0.083 Sociology of Race and Ethnicity

* 0.072 Du Bois Review

* 0.036 City and Community

* 0.032 Social Science Research

* 0.030 Social Psychology Quarterly

---

countries, global, economic, world

* 0.043 Theory and Society

* 0.037 American Journal of Sociology

* 0.036 Social Forces

* 0.030 Sociological Forum

* 0.029 Social Science Research

---

immigrants, migration, immigrant, immigration

* 0.027 Sociology of Race and Ethnicity

* 0.025 Social Problems

* 0.025 Demography

* 0.017 Social Forces

* 0.016 Social Science Research

---

network, networks, ties, capital

* 0.208 Social Networks

* 0.034 Social Psychology Quarterly

* 0.031 Sociological Science

* 0.029 American Sociological Review

* 0.028 American Journal of Sociology

---

sociology, science, work, sociological

* 0.070 Sociological Theory

* 0.069 Theory and Society

* 0.068 Symbolic Interaction

* 0.062 Sociological Methods and Research

* 0.044 Poetics

---

time, mothers, work, care

* 0.056 Journal of Marriage and Family

* 0.039 Gender and Society

* 0.031 Work and Occupations

* 0.015 Social Forces

* 0.014 Demography

---

environmental, climate, change, risk

* 0.020 Social Currents

* 0.018 Sociological Inquiry

* 0.013 Sociological Perspectives

* 0.011 Sociological Forum

* 0.010 Social Problems

---

effects, effect, associated, find

* 0.198 Social Science Research

* 0.160 Demography

* 0.155 Social Forces

* 0.153 Journal of Health and Social Behavior

* 0.151 Sociological Science

---

marriage, couples, marital, women

* 0.080 Journal of Marriage and Family

* 0.039 Demography

* 0.015 Journal of Health and Social Behavior

* 0.012 Social Science Research

* 0.010 Social Forces

---

status, class, groups, group

* 0.035 Social Psychology Quarterly

* 0.033 Poetics

* 0.027 Social Networks

* 0.024 American Journal of Sociology

* 0.024 Sociological Science

---

model, models, methods, method

* 0.273 Sociological Methods and Research

* 0.105 Social Networks

* 0.053 Sociological Science

* 0.042 Demography

* 0.029 Social Psychology Quarterly

---

labor, market, employment, job

* 0.091 Work and Occupations

* 0.021 Social Forces

* 0.018 American Sociological Review

* 0.018 Social Science Research

* 0.016 Social Currents

---

school, schools, students, achievement

* 0.154 Sociology of Education

* 0.026 Sociological Science

* 0.023 Social Science Research

* 0.019 Social Forces

* 0.018 Sociological Perspectives

---

young, life, youth, adults

* 0.075 Journal of Health and Social Behavior

* 0.050 Journal of Marriage and Family

* 0.031 Social Science Research

* 0.030 Demography

* 0.022 Sociological Perspectives

---

wage, workers, occupational, earnings

* 0.087 Work and Occupations

* 0.027 American Sociological Review

* 0.023 Social Forces

* 0.018 Gender and Society

* 0.017 American Journal of Sociology

---

state, policy, incarceration, legal

* 0.048 Du Bois Review

* 0.038 American Journal of Sociology

* 0.034 Social Problems

* 0.029 American Sociological Review

* 0.025 Theory and Society

---

trust, perceptions, people, behavior

* 0.134 Social Psychology Quarterly

* 0.042 Sociological Science

* 0.040 Social Science Research

* 0.037 American Sociological Review

* 0.034 Social Networks

---

neighborhood, urban, neighborhoods, segregation

* 0.183 City and Community

* 0.030 Social Science Research

* 0.029 Du Bois Review

* 0.029 American Journal of Sociology

* 0.026 Demography

---

theory, processes, understanding, process

* 0.243 Sociological Theory

* 0.174 Theory and Society

* 0.147 Mobilization

* 0.139 American Journal of Sociology

* 0.133 American Sociological Review

---

college, students, education, student

* 0.088 Sociology of Education

* 0.022 Sociological Perspectives

* 0.020 Sociology of Race and Ethnicity

* 0.019 Sociological Forum

* 0.017 Social Currents

---

racial, black, white, race

* 0.127 Sociology of Race and Ethnicity

* 0.102 Du Bois Review

* 0.038 Sociological Inquiry

* 0.036 Social Problems

* 0.026 Sociological Perspectives

---

mortality, fertility, birth, age

* 0.099 Demography

* 0.022 Journal of Health and Social Behavior

* 0.013 Journal of Marriage and Family

* 0.011 Sociological Science

* 0.010 Social Science Research

---

education, inequality, educational, income

* 0.047 Sociology of Education

* 0.044 Sociological Science

* 0.043 Demography

* 0.037 Social Forces

* 0.034 Social Science Research

---

religious, religion, moral, muslim

* 0.018 Social Currents

* 0.015 Qualitative Sociology

* 0.015 Sociological Forum

* 0.013 Social Science Research

* 0.013 Sociological Theory

---

women, gender, men, gendered

* 0.124 Gender and Society

* 0.034 Social Currents

* 0.029 Work and Occupations

* 0.024 Sociological Perspectives

* 0.024 Journal of Marriage and Family

---

health, mental, physical, status

* 0.146 Journal of Health and Social Behavior

* 0.030 Demography

* 0.023 Work and Occupations

* 0.021 Social Science Research

* 0.020 Social Psychology Quarterly

---

changes, time, change, period

* 0.100 Demography

* 0.067 Sociological Science

* 0.056 Social Forces

* 0.051 American Sociological Review

* 0.047 Social Science Research

---

survey, respondents, surveys, information

* 0.127 Sociological Methods and Research

* 0.037 Social Networks

* 0.024 Sociological Science

* 0.020 Social Psychology Quarterly

* 0.020 Demography

---

political, attitudes, support, public

* 0.038 Du Bois Review

* 0.032 Sociological Forum

* 0.032 Mobilization

* 0.026 Sociological Inquiry

* 0.025 Social Currents

---

relationship, relationships, quality, conflict

* 0.083 Journal of Marriage and Family

* 0.032 Social Psychology Quarterly

* 0.027 Journal of Health and Social Behavior

* 0.017 Social Science Research

* 0.016 Work and Occupations

---

case, power, historical, claims

* 0.201 Theory and Society

* 0.181 Sociological Theory

* 0.113 Symbolic Interaction

* 0.102 Qualitative Sociology

* 0.092 Du Bois Review

---

movement, collective, movements, media

* 0.243 Mobilization

* 0.050 Sociological Forum

* 0.037 Social Problems

* 0.036 Social Currents

* 0.033 Qualitative Sociology

---

organizations, organizational, institutional, organization

* 0.058 Work and Occupations

* 0.047 American Journal of Sociology

* 0.043 Theory and Society

* 0.038 American Sociological Review

* 0.037 Social Networks

---

children, family, parents, families

* 0.138 Journal of Marriage and Family

* 0.052 Demography

* 0.026 Social Science Research

* 0.025 Journal of Health and Social Behavior

* 0.023 Sociology of Education

---

interviews, work, practices, drawing

* 0.305 Symbolic Interaction

* 0.247 Qualitative Sociology

* 0.195 Gender and Society

* 0.149 Sociological Forum

* 0.146 Social Problems

---

sexual, identity, gay, sex

* 0.032 Gender and Society

* 0.020 Symbolic Interaction

* 0.019 Social Currents

* 0.017 Sociological Inquiry

* 0.016 Sociological Perspectives

---

cultural, culture, capital, consumption

* 0.165 Poetics

* 0.027 Sociological Theory

* 0.024 Theory and Society

* 0.019 Symbolic Interaction

* 0.018 Sociological Inquiry

---

Finally, I flip it around, and print each journal and the topics most associated with it.

def print_top_topics(journal, topic_means):

print(f"Journal: {journal}")

top_topics = topic_means.loc[journal].sort_values(ascending=False).head(5)

for topic, value in top_topics.items():

print(f"{value:.3f}: {topic.replace('_', ', ')}")

print("\n---\n")

journal_topic_means = df_combo.groupby("Source title")[tdf.keys()].mean()

for journal in journal_topic_means.index:

print_top_topics(journal, journal_topic_means)Journal: American Journal of Sociology

0.139: theory, processes, understanding, process

0.092: effects, effect, associated, find

0.078: interviews, work, practices, drawing

0.069: case, power, historical, claims

0.047: organizations, organizational, institutional, organization

---

Journal: American Sociological Review

0.133: theory, processes, understanding, process

0.111: effects, effect, associated, find

0.071: interviews, work, practices, drawing

0.051: changes, time, change, period

0.048: case, power, historical, claims

---

Journal: City and Community

0.183: neighborhood, urban, neighborhoods, segregation

0.133: interviews, work, practices, drawing

0.105: theory, processes, understanding, process

0.092: effects, effect, associated, find

0.065: case, power, historical, claims

---

Journal: Demography

0.160: effects, effect, associated, find

0.100: changes, time, change, period

0.099: mortality, fertility, birth, age

0.066: theory, processes, understanding, process

0.052: children, family, parents, families

---

Journal: Du Bois Review

0.104: theory, processes, understanding, process

0.102: racial, black, white, race

0.098: interviews, work, practices, drawing

0.092: case, power, historical, claims

0.076: effects, effect, associated, find

---

Journal: Gender and Society

0.195: interviews, work, practices, drawing

0.124: women, gender, men, gendered

0.099: theory, processes, understanding, process

0.055: case, power, historical, claims

0.052: effects, effect, associated, find

---

Journal: Journal of Health and Social Behavior

0.153: effects, effect, associated, find

0.146: health, mental, physical, status

0.092: theory, processes, understanding, process

0.075: young, life, youth, adults

0.052: interviews, work, practices, drawing

---

Journal: Journal of Marriage and Family

0.139: effects, effect, associated, find

0.138: children, family, parents, families

0.083: relationship, relationships, quality, conflict

0.080: marriage, couples, marital, women

0.062: theory, processes, understanding, process

---

Journal: Mobilization

0.243: movement, collective, movements, media

0.147: theory, processes, understanding, process

0.102: interviews, work, practices, drawing

0.081: effects, effect, associated, find

0.074: case, power, historical, claims

---

Journal: Poetics

0.165: cultural, culture, capital, consumption

0.132: theory, processes, understanding, process

0.122: interviews, work, practices, drawing

0.090: effects, effect, associated, find

0.089: case, power, historical, claims

---

Journal: Qualitative Sociology

0.247: interviews, work, practices, drawing

0.125: theory, processes, understanding, process

0.102: case, power, historical, claims

0.037: sociology, science, work, sociological

0.034: effects, effect, associated, find

---

Journal: Social Currents

0.116: effects, effect, associated, find

0.097: interviews, work, practices, drawing

0.089: theory, processes, understanding, process

0.047: case, power, historical, claims

0.044: changes, time, change, period

---

Journal: Social Forces

0.155: effects, effect, associated, find

0.109: theory, processes, understanding, process

0.056: changes, time, change, period

0.045: interviews, work, practices, drawing

0.043: case, power, historical, claims

---

Journal: Social Networks

0.208: network, networks, ties, capital

0.121: theory, processes, understanding, process

0.110: effects, effect, associated, find

0.105: model, models, methods, method

0.042: interviews, work, practices, drawing

---

Journal: Social Problems

0.146: interviews, work, practices, drawing

0.107: theory, processes, understanding, process

0.089: effects, effect, associated, find

0.053: case, power, historical, claims

0.037: movement, collective, movements, media

---

Journal: Social Psychology Quarterly

0.134: trust, perceptions, people, behavior

0.130: theory, processes, understanding, process

0.112: interviews, work, practices, drawing

0.104: effects, effect, associated, find

0.055: case, power, historical, claims

---

Journal: Social Science Research

0.198: effects, effect, associated, find

0.083: theory, processes, understanding, process

0.047: changes, time, change, period

0.040: trust, perceptions, people, behavior

0.034: education, inequality, educational, income

---

Journal: Sociological Forum

0.149: interviews, work, practices, drawing

0.119: theory, processes, understanding, process

0.079: effects, effect, associated, find

0.073: case, power, historical, claims

0.050: movement, collective, movements, media

---

Journal: Sociological Inquiry

0.137: interviews, work, practices, drawing

0.117: effects, effect, associated, find

0.102: theory, processes, understanding, process

0.048: case, power, historical, claims

0.038: racial, black, white, race

---

Journal: Sociological Methods and Research

0.273: model, models, methods, method

0.127: survey, respondents, surveys, information

0.124: theory, processes, understanding, process

0.096: effects, effect, associated, find

0.064: case, power, historical, claims

---

Journal: Sociological Perspectives

0.129: effects, effect, associated, find

0.106: interviews, work, practices, drawing

0.102: theory, processes, understanding, process

0.043: case, power, historical, claims

0.032: changes, time, change, period

---

Journal: Sociological Science

0.151: effects, effect, associated, find

0.099: theory, processes, understanding, process

0.067: changes, time, change, period

0.053: model, models, methods, method

0.044: education, inequality, educational, income

---

Journal: Sociological Theory

0.243: theory, processes, understanding, process

0.181: case, power, historical, claims

0.129: interviews, work, practices, drawing

0.070: sociology, science, work, sociological

0.036: effects, effect, associated, find

---

Journal: Sociology of Education

0.154: school, schools, students, achievement

0.132: effects, effect, associated, find

0.094: theory, processes, understanding, process

0.088: college, students, education, student

0.074: interviews, work, practices, drawing

---

Journal: Sociology of Race and Ethnicity

0.130: interviews, work, practices, drawing

0.127: racial, black, white, race

0.108: theory, processes, understanding, process

0.083: racial, ethnic, white, whites

0.073: effects, effect, associated, find

---

Journal: Symbolic Interaction

0.305: interviews, work, practices, drawing

0.125: theory, processes, understanding, process

0.113: case, power, historical, claims

0.068: sociology, science, work, sociological

0.035: effects, effect, associated, find

---

Journal: Theory and Society

0.201: case, power, historical, claims

0.174: theory, processes, understanding, process

0.130: interviews, work, practices, drawing

0.069: sociology, science, work, sociological

0.043: organizations, organizational, institutional, organization

---

Journal: Work and Occupations

0.110: theory, processes, understanding, process

0.109: interviews, work, practices, drawing

0.108: effects, effect, associated, find

0.091: labor, market, employment, job

0.087: wage, workers, occupational, earnings

---

The “theory, processes, understanding” shows up in the top five for every journal, but there some variations showing both the substantive topic, such as “neighborhood, urban, neighborhoods, segregation” or “school, schools, students, achievement.”

Overall, pretty good. When the work, I think topic models are a good way to identify major themes in a set of texts, but aren’t great for capturing more latent aspects of the data.

Ideas for further development: * Diagram to show how topics split when you increase k. * Use ChatGPT to evaluate the coherence of the topics to automate a word intruder approach. * Use ChatGPT to compare the coherence of Gibbs vs variational inference.